Well, that is what this guy claims...

It's not gravity you see -- it's because fluids magically seek their own level.

There is just one little problem... When you remove gravity the fluids no longer know which way is down and no longer have the magic to 'seek their level' just due to air pressure.

So he's just Flat Out Wrong.

Monday, August 29, 2016

Thursday, August 25, 2016

Flat Earth Follies: LAX to LHR Flight Path Takes You Near Iceland

YouTuber 'Flat Earth Addict' (a good term for them, they know it's bad for them but they can't quit) wonders why a flight going from the UK to LA passes near Iceland?

NO DUH

BECAUSE THAT IS CLOSE TO THE SHORTEST PATH ON THE GLOBE

Because both points are well into the Northern Hemisphere, his line on Gleason's azimuthal equidistant projection (a projection FROM A GLOBE, LOL) comes out close to the real route. Beyond that point the Gleason map IS TERRIBLE -- sizes, distances, compass headings, everything is far worse than on the Mercator projection. The ONLY thing accurate on the azimuthal equidistant projection is the distance TO THE CENTER POINT. All other distances are WRONG and they get more and more WRONG as you go further 'South' (towards his outer edge).
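If you want to check the "shortest path on the globe" claim yourself, here is a minimal sketch. The airport coordinates and the 6371 km mean radius are my own approximate assumptions, not values from the flight data, and the function names are made up for illustration.

```python
import math

# Approximate airport coordinates in degrees (assumed values).
LAX = (33.9416, -118.4085)
LHR = (51.4700, -0.4543)
EARTH_RADIUS_KM = 6371.0  # mean Earth radius (assumed)

def to_unit_vector(lat_deg, lon_deg):
    """Convert latitude/longitude to a 3D unit vector."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))

def central_angle(a, b):
    """Angle in radians between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot)))

def great_circle_km(p, q):
    """Great-circle distance between two (lat, lon) points."""
    return EARTH_RADIUS_KM * central_angle(to_unit_vector(*p), to_unit_vector(*q))

def max_latitude_on_path(p, q, steps=1000):
    """Sample the great circle between p and q and return its highest latitude."""
    a, b = to_unit_vector(*p), to_unit_vector(*q)
    omega = central_angle(a, b)
    best = -90.0
    for i in range(steps + 1):
        t = i / steps
        k1 = math.sin((1 - t) * omega) / math.sin(omega)
        k2 = math.sin(t * omega) / math.sin(omega)
        z = k1 * a[2] + k2 * b[2]  # z component gives the latitude
        best = max(best, math.degrees(math.asin(max(-1.0, min(1.0, z)))))
    return best

print(round(great_circle_km(LAX, LHR)), "km")
print(round(max_latitude_on_path(LAX, LHR), 1), "degrees N at the northernmost point")
```

Iceland sits at roughly 63° to 66° North, so a shortest path that climbs into the low 60s of latitude passing near it is exactly what you would expect.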

Notice how the paths are ALMOST identical? What is this guy trying to prove exactly?

You can find the story, such as it is, here:

Why did American Airlines flight travel 1,000 MILES back to London instead of landing in Iceland after medical emergency?

and a little more detailed here. (sounds like maybe aerotoxic syndrome)

All this proves is that they likely were close to Iceland which fits perfectly well with the Spherical Model Earth.

FlightAware shows the actual flight path on the Mercator projection, with a dotted line taking it a little closer to Iceland than the straight-line path -- this is not unusual for many reasons including safety, flight congestion, and legal restrictions. The line isn't straight on the Mercator projection because it PURPOSEFULLY distorts areas away from the Equator - BUT it does this to preserve compass directions.

Flat Earth Follies: The Horizon Always Rises To Eye Level

The Horizon Always Rises To Eye Level

How did you measure that? Doesn't look LEVEL to me. It's a few degrees below which is EXACTLY what you would expect on a spherical Earth of 3959 miles radius from a high altitude.
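A rough sketch of how far below eye level the horizon should sit on a sphere of 3959 miles radius. The two altitudes are my own example values (an airliner and a high-altitude balloon), and refraction is ignored.

```python
import math

R_MILES = 3959.0  # Earth radius used throughout this blog

def horizon_dip_deg(altitude_ft):
    """Geometric dip of the horizon below eye level, ignoring refraction."""
    h = altitude_ft / 5280.0  # feet to miles
    return math.degrees(math.acos(R_MILES / (R_MILES + h)))

for altitude in (35_000, 100_000):  # assumed example altitudes in feet
    print(altitude, "ft ->", round(horizon_dip_deg(altitude), 2), "degrees below eye level")
```

Both come out at "a few degrees", which is hard to eyeball but easy to measure.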

From this video:

Wednesday, August 24, 2016

General Recommendation: Learn Some Physics

If you want to know more about Physics

Richard Feynman - The Character of Physical Law

The first few entries in that playlist cover the basics of how we know what we know about Gravity.

Watch some physics classes 8.01 Classical Mechanics

Covers all the basics of Classical Mechanics

From there - you can check out more classes in iTunesU (iTunes University) and many other online resources.

Tuesday, August 23, 2016

Flat Earth Follies: PI is 3 because the Bible says so.

I would like to believe that this is a joke... A YouTuber named 'Awake Souls' claims that PI is really maybe 4 or probably 3, because the Bible says so mainly.

It's a 12 minute tour de force of nonsense and non sequiturs that rambles between ancient history, conspiracy theory, Biblical appeals, and Indiana Law from 1897 (HB 246).

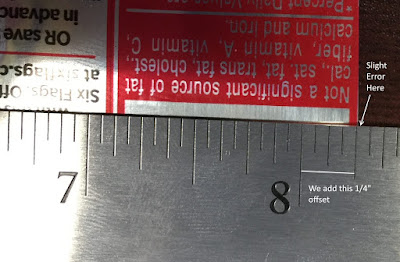

But let's just take a quick look at what PI actually is and why it is certainly neither 4 nor 3.

What is PI?

PI is JUST the ratio between the circumference of a circle and the diameter of that circle. That's it, there is nothing magic about it. All circles, no matter their size, have the same ratio, which is pretty cool because it means that if we know the radius of the circle we can figure out the circumference and area also, or vice versa. All it really means is that there is a relationship between these measurements.

Approximating PI

Finding a close approximation of PI is very easy.

Just find something that is very round and then take a string that doesn't stretch (fine thread works) and wrap it around your round cylinder (a tube or pipe that is uniform works, the larger the better) and mark it exactly once around -- measure that length with a finely marked ruler. That is the Circumference. You can also try to "roll" it along a flat surface and carefully mark & measure "once around".

Now measure the Diameter of the outside of that cylinder very carefully - just make sure you go as straight across as possible.

Now divide the length of the string by the diameter of the cylinder: C / D or Circumference / Diameter.

That's it - you have an approximation of Pi and it will very clearly be neither 3 nor 4.

Depending on how accurately you measured you'll find it's pretty close to 3.14 etc etc. The more accurately you measure it, the closer you'll get to 3.14159265 etc etc

Coke Can Method

I used a soda can. I cut a strip out of it so I could flatten that strip and measure it, it was ~207.5mm. I then measured across the can and got 66mm; 207.5/66 = 3.1439...

Beverage Can wiki says it's actually 2.6" wide which is ~66.04mm, which gives us: 3.1420 - a very good approximation without any hard work. I did this in under 5 minutes. We're far enough from 3 that PI is clearly not 3 - and we're very far from 4 so that's just ridiculous.

I went back today and remeasured everything in inches and took pictures. This time I got approximately 8 5/32" by 2.6" which gives me a ratio of 3.137. Even though measuring things super accurately is hard, we are nowhere even near 3.

Little bit of error introduced here because the edge didn't quite line up:

Another small amount of error introduced because my cut across the can wasn't perfect so it's slightly tilted:

For our circumference, I laid the strip out flat and measured it - was a little off here:

And on the other end we have 1/32nd inch marks (that's why I shifted it by 1/4"):

Can you get closer? Try to borrow some very accurate calipers and see.

Units Don't Matter to PI

And it's also very easy to verify that it doesn't matter if you measure in millimeters, inches, or anything else. The ratio doesn't depend on the units used -- just make sure you use the same units for both measurements (or convert them to the same units).
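A quick sketch of that check using the soda can numbers from above (207.5 mm around, 66.04 mm across). The variable names are just for illustration.

```python
MM_PER_INCH = 25.4

circumference_mm = 207.5  # flattened strip from the can
diameter_mm = 66.04       # 2.6 inches from the Beverage Can wiki

# Ratio measured in millimeters.
ratio_mm = circumference_mm / diameter_mm

# Convert both measurements to inches first, then take the ratio.
ratio_in = (circumference_mm / MM_PER_INCH) / (diameter_mm / MM_PER_INCH)

print(round(ratio_mm, 4), round(ratio_in, 4))
```

The conversion factor cancels out of the division, so both ratios agree.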

Approximating PI in Excel By Adding Up Little Rectangles

You can also approximate PI using the function |sqrt(1-x^2)|, which encloses an area of π/4 over the range x = 0 to x = 1 (because it traces out a little 1/4 circle).

Take a few values and just treat them like little rectangles and add up the area - like this image:

I did a little example in Excel, I use the average point between each sample for my height and each width is 0.1 units. Here are the Excel details so you can recreate it. I'm just doing one quarter of the circle - same function as above but x just goes from 0 to 1.

| Field | Excel Formula |
|---|---|
| sqrt(1-x^2) | =SQRT(1-(A2^2)) |
| Area: w x h | =(ABS(A3-A2)*ABS((B2+B3)/2)) |
| area*4 | =SUM(C3:C12)*4 |

This gives us the following calculation:

| x | sqrt(1-x^2) | Area: w x h |
|---|---|---|
| 0.0 | 1.00000000 | |
| 0.1 | 0.99498744 | 0.099749372 |
| 0.2 | 0.97979590 | 0.098739167 |
| 0.3 | 0.95393920 | 0.096686755 |
| 0.4 | 0.91651514 | 0.093522717 |
| 0.5 | 0.86602540 | 0.089127027 |
| 0.6 | 0.80000000 | 0.083301270 |
| 0.7 | 0.71414284 | 0.075707142 |
| 0.8 | 0.60000000 | 0.065707142 |
| 0.9 | 0.43588989 | 0.051794495 |
| 1.0 | 0.00000000 | 0.021794495 |
| Area*4 | | 3.104518326 |

All we've done here is add up all the little areas of each rectangle.

We're off a little bit, as expected, because our pieces are too big. Break those up into 100 pieces and you get much closer (I got 3.140417032). Break it up into 1000 pieces and you get even closer. So you are adding up smaller and more accurate pieces -- in the limit of an infinite number of pieces you get the exact value of PI. But it doesn't even matter what the EXACT value of PI is here -- the point is that it is just absurd to say it's 3.
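If you don't have Excel handy, the same sum can be sketched in a few lines of Python. This mirrors the spreadsheet (average of the two heights times the width, then times 4). The function name `pi_estimate` is my own.

```python
import math

def pi_estimate(pieces):
    """Approximate PI by summing strips under sqrt(1 - x^2) from x = 0 to 1."""
    width = 1.0 / pieces
    total = 0.0
    for i in range(pieces):
        x0, x1 = i / pieces, (i + 1) / pieces
        h0 = math.sqrt(max(0.0, 1.0 - x0 * x0))
        h1 = math.sqrt(max(0.0, 1.0 - x1 * x1))
        total += width * (h0 + h1) / 2.0  # average height times width
    return 4.0 * total                     # quarter circle times 4

for n in (10, 100, 1000):
    print(n, "pieces:", pi_estimate(n))
```

With 10 pieces this reproduces the Area*4 value from the table, and every increase in the number of pieces moves the estimate closer to PI.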

There are lots of other ways to approximate Pi

Monday, August 22, 2016

Flat Earth Follies: a curve cannot reflect a line/column of light

Several Flat Earthers have now tried to claim that a 'column of light' cannot possibly form on a convexly curved Earth - which is just ridiculous, but here we go… all will become clear.

This one I personally took on a sharply convexly curved overpass. Clear columning is observed along the convexly curved surface.

Here we observe it with sunlight all the way over the very clearly convexly curved wave!

And here is a diagram showing why.

Analysis: Apple Pie Hill to Philadelphia

This is a review of the view from Apple Pie Hill to Philadelphia done by professional surveyor Jesse Kozlowski, executed using a Wild Heerbrugg theodolite (Video).

Technical Data

Observation Point (Apple Pie Hill):

Ground: 204'

Tower: 46'

Total: 250'

Target (Comcast Center Tower):

Ground: 42'

Height: 973'

Top: 1015'

Zenith Angle: 89.95389° [89° 57' 14"] // ~166 arcseconds

Distance: 171281' (~32.44 miles)

Instrument (Wild Heerbrugg theodolite):

I do not know exactly which model of this theodolite was being used but looking at several that looked similar I'll estimate that we have a level accuracy of 30" which gives us about +/- 25 feet at this distance. But the specifications for these theodolites indicate that they are good for viewing objects about 12 miles away, so this would introduce additional error in our measurements as the angular size of objects over 32 miles away would be reduced, so roughly we might expect 24.912*(32/12) = +/- 66.432'

With better information here we might be able to tighten up our error bars a little bit, but I think I show below that this doesn't matter and greater accuracy would make the Flat Earth hypothesis even LESS likely.

Analysis Flat Earth

On a Flat Earth model we would have expected the distant level point to be even with our observation point at 250', so we should see 765' +/- 66.432' of the tower sticking out above our level mark. What we actually observe is well outside the range of even our very generous error bars.

Analysis Curved Earth

Therefore, on a globe, if we extend a level line out from our viewpoint on Apple Pie Hill, we would expect its elevation in Philadelphia to be the amount of curvature 'drop' (701.74' geometric / 601.49' with standard refraction) plus our elevation (250'), or somewhere between 851.49' and 951.74' at the Comcast Center Tower. That would leave between 163.51' +/- 66.432' and 63.26' +/- 66.432' of the tower above our level mark.
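Here is a small sketch of where those drop figures can come from. I'm assuming the simple d²/(2R) approximation for the drop, and modeling standard refraction as an effective Earth radius of 7/6 R. That scaling reproduces the 601.49' figure, but treat it as my assumption about the method.

```python
R_FT = 3959 * 5280   # Earth radius in feet
DISTANCE_FT = 171281 # Apple Pie Hill to the Comcast Center Tower
OBSERVER_FT = 250    # ground (204') + tower (46')
TOWER_TOP_FT = 1015  # ground (42') + height (973')

# Drop below the observer's level line, without and with refraction.
drop_geometric = DISTANCE_FT ** 2 / (2 * R_FT)
drop_refracted = drop_geometric * 6 / 7  # assumed 7/6 R effective radius

for label, drop in (("geometric", drop_geometric), ("refracted", drop_refracted)):
    level_mark = OBSERVER_FT + drop
    print(label, "drop:", round(drop, 2),
          "| level mark:", round(level_mark, 2),
          "| tower above mark:", round(TOWER_TOP_FT - level_mark, 2))
```

Small differences in the last digits from the figures above come down to rounding and which drop formula is used.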

Based on the zenith angle measurement of 166 arcseconds we calculate the top is an estimated [g = 2r*tan(α/2)] = 138.8' above the level mark - this agrees with the visual mark on the tower so that seems more accurate (indeed the angle measurement accuracy on this theodolite is cited as being 1" so a higher accuracy in this measurement makes sense).

That's a pretty big error bar, but our 138.8' is entirely consistent with the view we see, with only moderate refraction and accuracy considerably better than worst case.

Conclusion

So, this shows that this view is entirely compatible with a curved Earth of 3959 miles radius and is completely incompatible with a Flat Earth model.

Sunday, August 21, 2016

Flat Earth Follies: Moonlight is cold light

I can't even believe we have to have this conversation but apparently we do.

I have a Tsing 300 infrared thermometer that I use for both cooking and A/C analysis around the house. It's an inexpensive model but gets the job done. It reads a 1" spot at 1' - I took readings at this distance.

So I went outside on a heavily cloudy night (so there was absolutely no moonlight to magically cool things down) and started testing the temperature of various objects in various locations.

First object is a painted railing inside a stairwell. Still night air, all measurements taken on parts of the rail shaded from the two 60 watt bulbs that are pretty far away.

Bottom: 69 degrees

Middle: 70.7 degrees

Top: 75.1 degrees

So height seemed to matter a lot (for this spot, we can't assume this always holds), 6 degree increase over approximately 19.25 feet. So that's one possible source of error.

Down at ground level, far away from any building or lights I then took several measurements of the ground.

Open to the sky: 69.4

Just in shade of tree: 69.9

Ground closer to tree: 70.4

So NO moon light and it's warmer under the tree at night. But if I ONLY took these same measurements in moonlight I might incorrectly attribute this to the moonlight. That would be a FALSE conclusion.

Next, I walked around an area equally open to the sky and took ground temperature measurements over an approx 50' circumference, I got:

78.6

77.9

77.5

77.6

77.9

77.4

73.4

75.4

75.2

76.2

76.3

75.9

77.6

77.3

78.6

So every few feet I got different readings, up to a 5 degree swing, even a 4 degree swing in two spots not far from each other.
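To put numbers on that scatter, here is a small sketch that takes the fifteen ground readings listed above and reports the overall spread and the biggest jump between consecutive spots.

```python
# Ground temperature readings from the walk-around, in the order taken.
readings = [78.6, 77.9, 77.5, 77.6, 77.9, 77.4, 73.4, 75.4,
            75.2, 76.2, 76.3, 75.9, 77.6, 77.3, 78.6]

spread = max(readings) - min(readings)

# Largest change between two consecutive measurement spots.
largest_jump = max(abs(b - a) for a, b in zip(readings, readings[1:]))

print("spread:", round(spread, 1), "degrees")
print("largest jump between neighbors:", round(largest_jump, 1), "degrees")
```

Any "moonlight vs. shade" difference smaller than this no-moonlight scatter can't be told apart from ordinary local variation.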

So this tells me that even very local conditions can vary greatly and magical 'lunar light cooling' has NOTHING WHAT-SO-EVER to do with it.

I was also able to easily reproduce it being warmer under a tree without any moonlight so it doesn't matter WHY it can be warmer under something than in the open air, we know that moonlight cannot be assumed to be the cause.

It could be biological activity, it could reduce the effects of wind, it could be higher humidity, or all of the above. Every spot is probably different.

In short, people claiming the moonlight has a cooling effect failed to have any control data.

This is why science exists - to study the ways we FAIL and make sure to avoid repeating those errors in the future. Sadly our Flat Earth friends eschew science and so repeat even the most gratuitous failings of our past.

This failure is the very tempting Post hoc ergo propter hoc fallacy, "I moved the thermometer out of the moon light and the temperature increased, therefore the moonlight was cooling it down!" but this experiment clearly shows no such thing.

Friday, August 19, 2016

Flat Earth Follies: Why Don't The Days Shift By 12 Hours Between Summer and Winter?

Why Don't The Days Shift By 12 Hours Between Summer and Winter?

Flat Earthers LOVE to post little memes with low information content, for example... A very good question. However, the answer is very simple.

THEY DO.

Sidereal versus Synodic

One exact rotation of the Earth is called a sidereal day, which is a period of 86164.09054 seconds or 23 hours, 56 minutes, 4.09054 seconds. This is measured as the distant stars returning to the same position in the sky.

However, the '24 hour' period we all know and love is called a synodic day or 'mean solar day'. This is the average amount of time from Noon to Noon, and it is literally just a little bit more than one full rotation of the Earth, to make up for our motion around the Sun. Our orbit around the Sun isn't a perfect circle, so the actual length of a day varies slightly, but on average it is 86400.002 seconds -- this is why Noon is still Noon from Summer to Winter.

And if you look at the stars just before Sunrise you'll notice that they shift by this amount every day, about 236 seconds.

Remember that it was the ancient Sumerians who defined the meaning of '24 hours' (and the division into hours and seconds) based on the Sun being back in the same position as the previous day -- which automatically includes the time shifting due to our motion around the Sun even though they didn't know about it. Their version wasn't as accurate as ours but we still use their nomenclature because it works for humans. Noon to Noon is an easy concept to grasp.

Now let's do a little check.

The daily difference between the two is 86400.002-86164.09054 or approximately 235.91146 seconds per day (average!) so we should expect somewhere right at +1 sidereal day over the year.

Number of days in 1 tropical year is ~365.242188792

235.91146s * 365.242188792 = 23 hours 56 minutes 4.82 seconds !!!

So I'm within 3/4 of a second with my estimate, I feel pretty good about that.
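The same check as a few lines of Python, using only the numbers quoted above, in case you want to rerun it.

```python
SIDEREAL_DAY_S = 86164.09054
MEAN_SOLAR_DAY_S = 86400.002
DAYS_PER_TROPICAL_YEAR = 365.242188792

# How much longer a solar day is than one rotation, and the yearly total.
daily_difference = MEAN_SOLAR_DAY_S - SIDEREAL_DAY_S
yearly_total = daily_difference * DAYS_PER_TROPICAL_YEAR

hours, remainder = divmod(yearly_total, 3600)
minutes, seconds = divmod(remainder, 60)

print("daily difference:", round(daily_difference, 5), "s")
print("over a year:", int(hours), "h", int(minutes), "m", round(seconds, 2), "s")
print("off from one sidereal day by:", round(yearly_total - SIDEREAL_DAY_S, 2), "s")
```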

So that's why Noon is still Noon Winter, Spring, Summer, and Fall.

However, even the distant stars aren't fixed - they just appear to move very slowly because of their great distance. The very distant stars take tens of thousands to millions of years to move very much in our view, so they are 'fixed enough'.

But with our closer neighboring stars we can observe this more directly, such as with Barnard's star:

Tuesday, August 16, 2016

Flat Earth Follies: Nearby Sun Impossible

Reasons Why Nearby Sun Is Impossible

Parallax

A nearby Sun would produce a profound amount of parallax motion that would be obvious to a moving observer or observers in different locations.

Parallax motion is like when you drive down the road and the nearby trees appear to move through your field of view very rapidly, slightly more distant objects move more slowly, distant clouds move even more slowly, and a sun that is only some 3000 miles away would move as well.

But we observe the sun remaining in the same place even when viewed from fairly distant locations at the same time.

The amount of angular difference we should see in a 3000 mile distant sun for even just 100 miles between observers would be 1.9°! The sun is only 0.5° across in the sky, so this would be a shift of almost FOUR solar diameters. This would be trivial to observe, but in actuality the angle difference is too small to be measured without extremely specialized and costly equipment (less than one quarter of an arcsecond for observers 100 miles apart).
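A minimal sketch of both angles. The 93 million mile distance to the real Sun is my assumed round figure. The 3000 miles and 100 miles come from the paragraph above.

```python
import math

BASELINE_MILES = 100.0        # distance between the two observers
FLAT_SUN_MILES = 3000.0       # the claimed 'nearby' Sun
REAL_SUN_MILES = 93_000_000.0 # assumed round figure for the actual distance

def parallax_deg(baseline, distance):
    """Angular shift of an object seen from two points a baseline apart."""
    return math.degrees(math.atan(baseline / distance))

flat_shift_deg = parallax_deg(BASELINE_MILES, FLAT_SUN_MILES)
real_shift_arcsec = parallax_deg(BASELINE_MILES, REAL_SUN_MILES) * 3600

print("nearby Sun:", round(flat_shift_deg, 2), "degrees")
print("real Sun:", round(real_shift_arcsec, 3), "arcseconds")
```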

As you move further apart you also move around the curvature of the Earth so you start to measure the angle of curvature in addition to the angle to the Sun, we deal with this in Height of Sun.

Speed of Sun

With a nearby Sun it would also be impossible to maintain the same apparent motion of the Sun in winter time as in summer because the Tropic of Capricorn would be a much greater distance around than the Tropic of Cancer. So the Sun would either have to go a lot faster or it would take a lot longer to go all the way around.

Let's see -- the Equator is 24,901 miles around, which would mean a Flat Earth distance to the North Pole of 24,901/(2π) = 3963.12 miles. Since 22.5° is 1553 miles further, that gives us a circumference of 5516.12 × 2π = 34658.8 miles.

So the sun would have to go 39% faster than it does over the Equator to keep the length of a day the same, but #1 days stay 24 hours year round and #2 nobody notices the Sun racing overhead in the winter. So this Flat Earth model is impossible.

Height of Sun

From a right triangle we can calculate the height a if we have the distance b and angle α using the equation:

a = b × tan(α)

Some people get confused by the tangent function but this function is nothing but SIMPLE division because tan(α) means nothing more than taking the side opposite our angle α - which would be side a, divided by the side that is adjacent to our angle α - which is side b - so it's nothing more than tan(α) = a / b. You might also recognize (a / b) as being RISE/RUN, or the slope of a line and this is exactly what it is!

So this means these are all saying exactly the same thing:

a = b × SLOPE

a = b × tan(α)

a = b × (a/b)

This isn't complex or scary math. It just means that the angle and the slope are really the same thing and we can convert between them. They are just expressed in different units.

From this you can also see why when a and b are equal in length then a/b would be 1 and sure enough, tan(45°) = 1 -- this value 1 is the slope of the line. So 45° just means the slope is 1.

IF the Earth was flat then we could use this formula to find the height of the Sun!

Street Lamp Example

Let's take a simple example first:

Standing at point C (from our diagram above) a street lamp is directly overhead. We walk 10 meters to point A and find that our angle α is 45° - 10 meters * tan(45°) = 10 meters so our light is 10 meters up (remember tan(45°) = 1 so this is trivial). Easy enough. Now let's walk out to 20 meters away from C. Since we doubled our distance that means our slope is one-half:

Now we find the height of the lamp is 20 * 0.5 -- this formula still gives us 10 meters. We didn't even need to use trigonometry because we already know the slope of the line - but we find the angle is 26.5650512°

No matter what distance you pick you can find the SAME height by multiplying in your distance times the slope of the line (or finding the slope from the angle using tan(α)).
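The street lamp example as a short sketch, so you can try other distances. The function name `height_from_angle` is just for illustration.

```python
import math

def height_from_angle(distance, angle_deg):
    """a = b * tan(alpha): height of a light above a FLAT surface."""
    return distance * math.tan(math.radians(angle_deg))

# At 10 meters the lamp is seen at 45 degrees.
height_at_10 = height_from_angle(10.0, 45.0)

# At 20 meters the slope is 10/20 = 0.5, which is this angle:
angle_at_20 = math.degrees(math.atan(0.5))
height_at_20 = height_from_angle(20.0, angle_at_20)

print(round(height_at_10, 6), round(angle_at_20, 7), round(height_at_20, 6))
```

On a flat surface every distance gives back the same 10 meter height, which is the property the Sun measurements would have to share if the Earth were flat.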

Does This Work on Earth?

The question now is, does this actually work when we measure the Sun from Earth? If the Earth is curved then our segment b is curved and this function will NOT give a consistent value for the height of the Sun because the angles will be off because our horizon would be rotating along with us as we moved further North, like so:

In this case our 45° is REALLY 90° because our horizon is tilted by about 45°

So let's take some measurements at different latitudes and try to calculate the height of the Sun from those.

If we look at data from the 2016 Vernal Equinox, which occurred at Sun, 20 Mar 2016 04:29:48 UTC, we find that the sun is directly overhead at Longitude: 114° 24' 6.8394" (or 114.4019 in decimal form). From here we can find the angle of the sun from the horizon at various latitudes, and we note that every 10° of latitude is about 1111 km. If you prefer to use more exact values you can find them using the Great Circle distance calculator; I recommend the WGS84 data as the most accurate. The distance between latitude lines is not exactly equal because the Earth is a very slightly oblate spheroid and not a perfect sphere. However, the differences are small enough that they don't really matter for what we are doing here.

Here is our data... isn't it funny how the angle of the sun is right at 90° minus our latitude? This would NOT be the case on a Flat Earth. If the Earth was flat the angles would be slowly approaching 26.565° (from our streetlight example we know that if we double the distance it should make the slope one half) rather than approaching 0° and we would get a constant Height back as we did with the streetlamp.

Reminder, our height column here is given by our formula: a = b × tan(α)

| Latitude | Observed Angle Of Sun At Equinox | Distance from Equator (miles) | Which Height Is It? (miles) |
|---|---|---|---|
| 10° | 80° | 690.3 | 3915.1 |
| 20° | 70.01° | 1380.0 | 3793.6 |
| 30° | 60.01° | 2070.3 | 3587.4 |
| 40° | 50.01° | 2760.7 | 3291.2 |
| 45° | 45.02° | 3105.2 | 3107.3 |
| 50° | 40.02° | 3450.3 | 2897.2 |
| 60° | 30.03° | 4140.0 | 2393.1 |
| 70° | 20.04° | 4829.6 | 1761.7 |
| 80° | 10.09° | 5516.5 | 981.7 |
| 89° | 1.36° | 6119.2 | 145.3 |
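The height column can be recomputed from the other two with the same a = b × tan(α) formula. This sketch just reruns the table and compares the result against the listed heights. The row tuples are copied from the table.

```python
import math

# (latitude, observed sun angle in degrees, distance from Equator in miles, listed height)
rows = [
    (10, 80.00, 690.3, 3915.1),
    (20, 70.01, 1380.0, 3793.6),
    (30, 60.01, 2070.3, 3587.4),
    (40, 50.01, 2760.7, 3291.2),
    (45, 45.02, 3105.2, 3107.3),
    (50, 40.02, 3450.3, 2897.2),
    (60, 30.03, 4140.0, 2393.1),
    (70, 20.04, 4829.6, 1761.7),
    (80, 10.09, 5516.5, 981.7),
    (89, 1.36, 6119.2, 145.3),
]

def flat_earth_sun_height(distance_miles, angle_deg):
    """Height the Sun would need to have IF the ground were flat."""
    return distance_miles * math.tan(math.radians(angle_deg))

heights = [flat_earth_sun_height(dist, angle) for _, angle, dist, _ in rows]
for (lat, _, _, listed), computed in zip(rows, heights):
    print(lat, "deg:", round(computed, 1), "miles (table:", listed, ")")
```

A flat surface would give the same height on every row, the way the street lamp did. Instead the answer changes with every latitude.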

But with the sun angles we observe the Flat Earth model clearly does not work.

It is Impossible for the Sun to be at 3915.1 miles and 145.3 miles up at the same time and those values are so far apart it cannot be simple measurement error. It is just fundamentally wrong.

The Earth is curved.

Monday, August 15, 2016

V2 rocket footage from 1948

I've seen a lot of Flat Earthers pointing to the V2 footage and proclaiming 'THERE IS NO CURVE!'

Yeah, that looks pretty flat all right. I guess I should give up...

Because we all know that looking at one, isolated, narrow FOV image proves the Earth is flat - even though we know from Visually Discerning the Curvature of the Earth and my post on high-altitude balloon footage that we require a wider field of view to even expect to see the curvature. This is how Flat Earth arguments 'work' -- for some odd reason, you have to look at only their evidence without considering all the evidence.

What do those other frames look like?

(credit: NASA)

Oh my...

So which is it Flat Earth? Are these evidence or not?

And a large, professionally stitched version from reddit:

The numbers I get for 65 miles gives us a ground distance to the horizon of 712 miles (and viewer distance of 720 miles). With an apparent FOV of 60°/80° for each of the individual photos we get sagitta of 94.9/165.8 miles and a viewing angle for that much sagitta of just 0.1°/0.35° -- which would be just a few pixels per frame (my image is 419 pixels, divide by 80 to get pixels per degree, multiplied by 0.35 degrees = 1.8 pixels). You can also see how that 165.8 miles is almost completely on edge to the viewer because it is curving down and away from us, this makes it almost impossible to see any detail in that thin line of horizon.
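A rough sketch of how the distance and sagitta figures above can be reproduced. I'm assuming a perfect sphere of 3959 miles radius with no refraction, and taking the sagitta as that of the horizon circle over half the field of view on each side. The function names are mine.

```python
import math

R = 3959.0  # Earth radius in miles
h = 65.0    # camera altitude in miles

# Angle at the Earth's center between the camera and the horizon.
theta = math.acos(R / (R + h))

ground_distance = R * theta                 # along the surface
viewer_distance = math.sqrt(2 * R * h + h * h)  # straight line of sight
horizon_circle_radius = R * math.sin(theta)

def sagitta(fov_deg):
    """Depth of the horizon-circle arc spanned by the given field of view."""
    return horizon_circle_radius * (1 - math.cos(math.radians(fov_deg / 2)))

print("ground distance:", round(ground_distance), "miles")
print("viewer distance:", round(viewer_distance), "miles")
print("sagitta at 60/80 degrees:", round(sagitta(60), 1), "/", round(sagitta(80), 1), "miles")
```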

As a reminder, this angle is the viewing angle from D to E below (Z to horizon₀ in the side view).

Which is what we find when we look carefully at frames where the horizon most closely passes near the center of the lens so they have the least amount of distortion:

The totality of the images seems to cover slightly more than 180° of view, which means the stitched panorama is actually flattened out and should curve even more.

See Also: Air & Space: First Photo From Space

Friday, August 12, 2016

Nikola Tesla - Earth Circumference

"As one-half the circumference of the earth is approximately 12,000 miles long there will be, roughly, thirty deviations." ~Nikola Tesla

Tuesday, August 9, 2016

Flat Earth Follies: High Altitude Balloon footage PROVES Flat Earth

Well... no, not even a little bit...

Almost all the footage from high-altitude balloons shows the apparent curvature fluctuating from

If we were looking through such a lens straight at a square grid of lines the resulting image would look something like this:

The middle portions (vertically and horizontally) are expanded outwards from the center so our straight lines appear to be bowed the further from the center you get, exactly as we saw in the first frame when the horizon was below center and it was bowed downwards.

This means that when the horizon is at or below the center line and you can still see positive curvature, then you can be sure there is actual curvature.

One thing we can do is try to find a frame where the peak of the horizon goes through the center of the lens, to minimize distortion, and is fairly level. And since the downward curved horizon would entirely fall in the lower half of the frame, the distortion here is actually making the curvature appear flatter rather than exaggerating it. Once you understand that you are already seeing less curvature than is actually present in such an image, you really shouldn't need any further analysis to know that this shows positive curvature.

At the lower altitudes we all know that it will at least appear very flat and be difficult to measure¹, as it should be given the size of the Earth. The visual curvature at this point would be mere fractions of a degree so we're going up to around 100,000 feet to get a better view.

For this detailed analysis I'm using the raw RotaFlight Balloon Footage from around 3:22:50 because they documented the camera details, the horizon is pretty sharp, and this footage is available at 1080p. (An earlier version of this page used the Robert Orcutt footage, but it was 720p, I didn't know which camera or settings he used, and the horizon wasn't very sharp in that footage.) The camera used in the RotaFlight footage was a GoPro Hero3 White Edition in 1080p mode. From the manual I know that 1080p on this camera only supports Medium Field of View, which is ~94.4°. The balloon pops almost 4 minutes later (at 3:26:29), so we should be up around 100,000' in this frame.

I marked the center of the frame with a single yellow pixel, so you can see the horizon is either at or maybe 1 pixel below that mark. There are 38 pixels between the two red lines (both of which are 1 pixel high and absolutely straight across the frame) which mark the extents of the peak of our horizon and where the horizon hits the edge of the frame (±2 pixels as the horizon isn't perfectly sharp due to the thick atmosphere along the edge).

Common sense should tell you that, because of the enormous size of the Earth compared to our view, we would expect the visible curvature to still be very slight, even at 100,000 feet, especially with a more narrow Field of View as shown when we crop this image:

Our horizon peak (P) is therefore found directly ahead (x=0), down at the horizon circle plane (y=H), and at a distance Z from our camera. This is not the total distance (D) from camera to point P but rather only the z-axis distance (Z).

P = [0, H, Z]

Which projects into 2D as:

P" = [0/Z, H/Z]

P" = [0, H/Z]

So far, very simple.

⎡x⎤ ⎡ 1 0 0 ⎤

Given an angle u the equations to transform x, y, and z coordinates are:

x' = x

y' = y*cos(u) - z*sin(u)

z' = y*sin(u) + z*cos(u)

Since we are 'looking down' we need to rotate 'up' so our angle will be positive and equal to Horizon Dip to bring P right into the center of our frame at [0,0].

Our point P starts at [0, H, Z] and we need to rotate point P to be straight ahead, so our u angle:

u = atan(H/Z) = atan(D/R)

x' = 0

y' = H*cos(u) - Z*sin(u) = 0

z' = H*sin(u) + Z*cos(u) = D

P' = [0, 0, D]

and project into 2D:

P" = [0/D, 0/D]

P" = [0, 0]

That was easy because the rotation exactly cancels out the previous H/Z slope. But it's a good check that our rotation was in the desired direction.

And next we need to rotate point B, we start out in the same place as before (this introduces a small error because our rotated FOV would intersect the horizon circle at a slightly different point, I'm assuming a small rotation so the error is negligible and we can ignore this term).

B = [Z*sin(a/2), H, Z*cos(a/2)]

And we rotate by matrix multiplication, for our small angles the cos(u) term will the majority of the value, and we shift a tiny bit into sin(u).

x' = Z*sin(a/2)

y' = H*cos(u) - (Z*cos(a/2))*sin(u)

B' = [Z*sin(a/2), H*cos(u)-(Z*cos(a/2))*sin(u), H*sin(u)+(Z*(cos(a/2))*cos(u)]

and project into 2D by dividing x and y by z:

B" = [(Z*sin(a/2))/(H*sin(u)+(Z*(cos(a/2))*cos(u)), (H*cos(u)-(Z*cos(a/2))*sin(u))/(H*sin(u)+(Z*(cos(a/2))*cos(u))]

This is why you need special video cards to play games at high frame rates... and we've only rotated one axis.

H*cos(u) - (Z*cos(a/2))*sin(u)

Δy = -------------------------------

H*sin(u) + (Z*cos(a/2))*cos(u)

Divide Δy by 2 times B" rotated x-extent (x/z) to get our ratio (I multiply by the inverse here):

H*cos(u) - (Z*cos(a/2))*sin(u) H*sin(u)+(Z*(cos(a/2))*cos(u)

------------------------------ * -----------------------------

H*sin(u) + (Z*cos(a/2))*cos(u) 2*Z*sin(a/2)

We can see that our lens, altitude, and FOV are all very important to figuring out what we expect to see and knowing what to expect is critical in evaluating what we actually see - especially through the eye of a camera lens. And that just because the camera image has some distortion it doesn't mean the photo tells us nothing about the scene. Indeed, this image very clearly shows the curvature despite the distortion working against it.

If you want to measure the curvature you have to be very careful and pretty high up.

And while 35,000' sounds pretty high up, here is what that looks like:

Online Lens Correction photo-kako

Howto Remove HERO3 Lens Distortion: youtube

PTLens

ImageMagick: Correcting Lens Distortions in Digital Photographs

Here is our image with correction applied to 'defish' the image and you can clearly see the inverse effect it has in the 'pincushion' appearance of the previous straight-lines (the progress bar and text). But as you can see, our curvature remains clearly pronounced because there is very little distortion in the center of the lens and because we've placed all of our horizon below center the evil 'fish-eye' was actually slightly flattening out the real curvature.

There it is folks -- the curvature of the horizon at ~100,000'

Of course, this only demonstrates that our horizon is a circle of a radius entirely consistent with the oblate spheroid model of the Earth. What we have done here is calculate how big we would expect that circle to be given our altitude above a spheroid and how that should appear in a camera lens given some field of view.

Measurements and observations can only lend weight to any model, they never "prove" it, as Flat Earthers are so fond of claiming. Rarely is this more clear than the observation that just because a photo makes it look flat (or curved), doesn't mean it is actually flat (or curved). It just means the curvature is hard to measure, especially at lower altitudes (in this case, due to the immense size of the Earth) and in the face of lens distortion. But we have taken these into account in this analysis so we can be fairly sure we are seeing actual curvature of the horizon.

But I see no way that this view would be consistent with a Flat Earth model, there is no known physics that would explain the observed size of this observed horizon on a Flat Earth. We are well above the majority of the atmosphere here so we would be able to see MUCH further if the plane below us were flat.

Not So Fast

Almost all the footage from high-altitude balloons shows the apparent curvature fluctuating from

...concave (when the horizon line is below center of the lens)

...to convex (when the horizon line is above center)

...to flat?

This effect is called barrel distortion ('Lens Barrel') and is almost unavoidable when you are using the wider-angle lenses common for this purpose, especially on cameras such as the popular GoPro series. Lenses that try to compensate for this are called Rectilinear lenses, but it's more difficult, and more expensive, to make a wide-angle Rectilinear lens.

I marked a big X on the last frame to help us find the center of the image (the other two are obviously far from center). What we find is that the closer the horizon line is to the center of the lens the less distortion there is.

All three of these images are from the same video, the same camera, and the same lens. They are all distorted exactly the same way. This is just to demonstrate that the area in the center of the lens has less distortion and to show clearly the direction of that distortion.

So it should be clear that you cannot just pick any frame from a video and declare the Earth to be flat or curved. You have to analyze what you see so you can draw more accurate conclusions.

If we were looking through such a lens straight at a square grid of lines the resulting image would look something like this:

|

| Figure 1. Curvilinear distortion, credit Wikimedia Commons |

This means that when the horizon is at or below the center line and you can still see positive curvature, then you can be sure there is actual curvature.

And, with the horizon below the center line, we would also expect the measured "apparent curvature" to be slightly less than the actual curvature due to this lens distortion. However, the edges are less distorted than the middle portion so under normal conditions the distortion should be small.

We really only care about two points, the peak of the horizon and where the horizon meets the edge of the frame.

We can also use the properties of the lens to reverse the distortion introduced, as I've done here with the first two images.

|

| Frame from Robert Orcutt footage, corrected for lens distortion |

|

| Frame from Robert Orcutt footage, corrected for lens distortion |

How to proceed?

One thing we can do is try to find a frame where the peak of the horizon goes through the center of the lens, to minimize distortion, and is fairly level. And since the downward curved horizon would entirely fall in the lower half of the frame, the distortion here is actually making the curvature appear flatter rather than exaggerating it. Once you understand that you are already seeing less curvature than is actually present in such an image, you really shouldn't need any further analysis to know that this shows positive curvature.

At the lower altitudes we all know that it will at least appear very flat and be difficult to measure¹, as it should be given the size of the Earth. The visual curvature at this point would be mere fractions of a degree so we're going up to around 100,000 feet to get a better view.

For this detailed analysis I'm using the raw RotaFlight Balloon Footage from around 3:22:50 because they documented the camera details, the horizon is pretty sharp, and the footage is available at 1080p. (An earlier version of this page used the Robert Orcutt footage, but it was 720p, I didn't know which camera or settings he used, and the horizon wasn't very sharp.) The camera used in the RotaFlight footage was a GoPro Hero3 White Edition in 1080p mode. From the manual I know that 1080p on this camera only supports the Medium Field of View, which is ~94.4°. The balloon pops almost 4 minutes later (at 3:26:29), so we should be up around 100,000' in this frame.

I used a Chrome plugin called 'Frame by Frame for YouTube™' to find a frame where the horizon is about one pixel below dead center and it is very level. I then captured that frame at 1920x1080:

|

| RotaFlight Raw Weather Balloon Footage, ~3:22:50 |

I marked the center of the frame with a single yellow pixel, so you can see the horizon is either at or maybe 1 pixel below that mark. There are 38 pixels between the two red lines (both of which are 1 pixel high and absolutely straight across the frame) which mark the extents of the peak of our horizon and where the horizon hits the edge of the frame (±2 pixels as the horizon isn't perfectly sharp due to the thick atmosphere along the edge).

And again, since the limbs of the Earth shown here are below the center of the lens the distortion is, if anything, reducing the apparent curvature into a slightly flatter curve.

If you wanted a picture you could look at and verify the positive curvature of the Earth, this is that picture.

How close is that to what we EXPECT to see?

Common sense should tell you that, because of the enormous size of the Earth compared to our view, we would expect the visible curvature to still be very slight, even at 100,000 feet, especially with a more narrow Field of View as shown when we crop this image:

What we need to calculate is how much of a visual 'bump' the peak of our horizon should have over where the horizon meets the edge of the frame, given our estimated altitude of 100,000', a camera horizontal Field of View of 94.4°, and an image 1920 pixels across.

But why is there a visual bump anyway?

Perspective

One thing we know from the study of Perspective is that a circle, when viewed at an angle other than 90°, is going to appear as an ellipse that is more and more 'smushed' the steeper the angle at which we view it. Viewed on edge from the middle it's going to look 'flatter and flatter'.

|

| Figure 2. image credit |

In our case we are in the middle of this circle and we're viewing the edge at an angle of about 5.6° at this altitude, so it's going to be very squished, but it would still have a definite bump in the middle.

And to find that we need...

Geometry

NOTE: we are using a spherical approximation of the Earth and ignoring atmospheric refraction in order to make our calculations manageable. This means that for larger heights our estimates will diverge slightly from actual observations. Not by very much, but keep in mind there is a small margin of error here, as Earth's radius differs slightly by location (it only varies by ~0.34%). I am also not using the same method as the Lynch paper, as I could not find his equation for X at the time of this writing; I get only very slightly different results from Lynch using this approach. So to be clear, this method is only a way to get a Very Good approximation of what you would expect to see, not a perfect calculation.

To help us get our geometry and nomenclature down I made some diagrams.

We are at point O (the observer) at about h=100000' (~18.9 miles) above an Earth with a radius R of about 3959 miles. When we look out to a point just tangent to the surface of the Earth, our horizon peak at point P will appear "a little higher" in our field of vision than the edges of the horizon do, at points B/C. This is easy to tell because we can draw lines OP and OK and there is clearly an angle between them. Point G is ground-level and point A is the center of our horizon circle.

Because line D is a tangent we know that it forms a right angle with the radius of our sphere at point P; this allows us to easily calculate all the distances and angles involved:

|

| Figure 3. NOTE: 100,000' would only be ~2 pixels high at this scale! As shown, h is ~900 miles up. |

Here is our view from above (this one is to scale), showing the horizon circle in green, the edges of the horizon at points B & C, and the distance KP is our horizon circle Sagitta:

|

| Figure 4. Overhead View of Horizon Circle - in GeoGebra |

And here are the calculations from my GeoGebra Calculator showing the side view:

|

| Figure 5. Side View of Geometry - in GeoGebra |

So in an ideal rectilinear lens we would expect to see approximately 41 pixels between the edges of our horizon circle (points B & C) and our horizon peak (at point P).

This is very good agreement with the image above where we have ~38±2 pixels using the Curvilinear lens which has slightly squished our curvature.

The Math

In this section we will look in detail at the mathematics for the above geometry and we will use this to then estimate what we expect to see in our camera.

We are given only a few values to start with but we can find all the rest using just the Pythagorean theorem using our two right triangles. After that we will step through the projection and calculate how many pixels we should observe which requires us to map our view into a 2D frame like our picture is. If you need help with some of the right triangle formulas here you can use this Right Triangle Calculator which will explain it in more detail (remember to put the 90° angle in the correct position and select the element you want to solve for).

So here are the solutions to the various line segments in our diagram. These are all fairly straightforward right triangle solutions. We mainly need D, H, & Z, which we get from simple geometry. I've included some of the other calculations for reference only. The values for a, p, h, and R are given.

| Variable | Equation | Value | Description |
|---|---|---|---|
| a | 94.4° | 94.4° / 1.647591 rad | Horizontal Field of View |
| p | 1920 pixels | 1920 pixels | Horizontal Resolution (pixels) |
| h | 100000 ft | 18.9394 mi | Observer Height (in miles) |
| R | 3959 mi | 3959 mi | Earth Radius (approximate) |
| β | asin(R/(h+R)) | 84.408° / 1.4732 rad | angle at XOD; (90°-β) is the angle from level to horizon point P |
| D | sqrt(h*(h+2*R)) | 387.7123 mi | distance to the horizon (OP) |
| Z | (D*R)/(h+R) | 385.8664 mi | radius of horizon circle; also AP = D*sin(β) = ((sqrt(h*(h+2*R)))*R)/(h+R) |
| S | (h*R)/(h+R) | 18.8492 mi | distance to horizon plane from Ground; also AG = R-sqrt(R²-Z²) |
| H | S+h | 37.7886 mi | Observer Height above horizon plane; also OA = D*cos(β) = ((h*R)/(h+R))+h |
| S₁ | Z*(1-cos(a/2)) | 123.6928 mi | height of the chord made by BC, given by KP; this is where our Field of View is used to find point K |
| u (Horizon Dip) | atan(H/Z) | 5.593° | angle from level to point P (the angle for OP, from the slope of H over Z; also 90°-β) |
| Chord Dip | atan(H/(Z-S₁)) | 8.202° | angle from level to point K (also atan(H/(Z*cos(a/2))); again, the angle for the slope of OK) |
| Horizon Sagitta Angle | abs(Chord Dip - Horizon Dip) | 2.6086° | True/geometric angle between horizon peak and edges |
| Sagitta Pixel Height | *discussed below | ~40 pixels | Estimate in pixels |

So even at 100,000' our horizon is a mere 386 mile radius circle and we're just 19 miles above the ground at its center (about 38 miles above the plane of the circle itself). So we are still only seeing a fraction of the Earth at a fairly slight angle, even from 100,000'. And a large portion of that distant horizon is actually compressed by perspective and tilted to our viewpoint, making it virtually impossible to see. The majority of the visible area where you can make anything out is considerably smaller than the full horizon distance.

Given this configuration we should then expect to see only a 2.6° difference between the horizon peak and the line formed between the edges of the horizon. That is slight but measurable.
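If you would rather check the table than take my word for it, here is a minimal Python sketch of the same right-triangle formulas. The function name `horizon_geometry` and the dictionary keys are my own labels for illustration (they are not from the GeoGebra calculator), and it makes the same assumptions as above: a spherical Earth with R = 3959 miles and no refraction.

```python
import math

def horizon_geometry(h_mi, R_mi=3959.0, fov_deg=94.4):
    """Spherical-Earth horizon geometry (no refraction), all lengths in miles."""
    half_fov = math.radians(fov_deg) / 2.0
    D = math.sqrt(h_mi * (h_mi + 2.0 * R_mi))    # OP: distance to the horizon
    Z = D * R_mi / (h_mi + R_mi)                 # AP: radius of the horizon circle
    S = h_mi * R_mi / (h_mi + R_mi)              # AG: ground to horizon plane
    H = S + h_mi                                 # OA: observer above horizon plane
    horizon_dip = math.degrees(math.atan2(H, Z))                     # slope of OP
    chord_dip = math.degrees(math.atan2(H, Z * math.cos(half_fov)))  # slope of OK
    return {"D": D, "Z": Z, "S": S, "H": H,
            "horizon_dip": horizon_dip, "chord_dip": chord_dip,
            "sagitta_angle": chord_dip - horizon_dip}

geometry = horizon_geometry(100000 / 5280)   # 100,000 ft converted to miles
for name, value in geometry.items():
    print(f"{name:>14}: {value:.4f}")
```

Changing the first argument lets you see how quickly the sagitta angle shrinks at lower altitudes.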

But now we want to know if this matches our image.

3D Projection

Since we want to compare this to our photograph (Sagitta Pixel Height) we also need to know how many pixels high that is and this is where things get a little bit tricky.

We will run through this process twice. The first time looking straight out and the second time we will rotate our view to look slightly down directly toward our horizon peak (P) which is fractionally more accurate for the image we are looking at but also more complex.

For our 3D to 2D projection we can take 3D coordinates [x,y,z] and transform them by dividing x and y by the z value [x/z, y/z] which projects onto the plane z=1. We can show that this preserves straight lines by considering two points [0,100,100],[50,100,100] which trivially transform into [0,1],[0.5,1] - so we can see they remain along the same y value, therefore remain in a straight line - we've simply scaled them down proportional to their distance (which is how perspective works).
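The same two-point check can be written as a few lines of Python. This is only a sketch of the [x/z, y/z] divide described above; the helper name `project` is hypothetical.

```python
def project(point):
    """Perspective-project a 3D point [x, y, z] onto the plane z = 1."""
    x, y, z = point
    return (x / z, y / z)

# Two points at the same height and depth stay on the same horizontal line.
left = project((0, 100, 100))
right = project((50, 100, 100))
print(left, right)

# A point on a rail running straight away from us drifts toward the
# center of the frame as its distance z grows.
for z in (10, 100, 1000):
    print(z, project((5, 5, z)))
```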

Geometrically, think about being in the middle of 4 equally spaced parallel lines which run out in front of you, as the distance (z) increases the points at different z values would simply get closer and closer to the center in the 2D projection. It is easy to see how [x/z, y/z] accomplishes this.

The second step then is finding the 'edge' of our frame. Since we know our Field of View (FOV) we can find that by locating the x-axis extent of a point lying along our Field of View (conveniently provided by finding the edge of our horizon).

We make the origin be our camera at [0,0,0] and we are looking out parallel to the plane of our horizon circle along the z-axis (towards [0,0,1]) and our axes are oriented as follows:

x-axis = left(-)/right(+)

y-axis = up(-)/down(+)

z-axis = behind(-)/forward(+)

Locating our Points

Our horizon peak (P) is therefore found directly ahead (x=0), down at the horizon circle plane (y=H), and at a distance Z from our camera. This is not the total distance (D) from camera to point P but rather only the z-axis distance (Z).

P = [0, H, Z]

Which projects into 2D as:

P" = [0/Z, H/Z]

P" = [0, H/Z]

So far, very simple.

Where is point B? It is to the right at 1/2 the FOV angle (a/2) on our horizon circle. We can convert an angle and distance (radius of our horizon circle = Z) into coordinates using x=Z*sin(a/2), z=Z*cos(a/2), and of course our y value is the same as P, in the plane of the horizon circle at y=H. Here is a diagram showing our horizon circle and where the points are located on it.

|

| Figure 6. Overhead View of Horizon Circle with Equations for Dimensions |

This places Point B at

B = [Z*sin(a/2), H, Z*cos(a/2)]

and we again divide x & y by z to project into the 2D plane:

B" = [(Z*sin(a/2))/(Z*cos(a/2)), H/(Z*cos(a/2))]

Finding the y-axis delta

Now that we have both of our points mapped into 2D coordinates:

P" = [0, H/Z]

B" = [(Z*sin(a/2))/(Z*cos(a/2)), H/(Z*cos(a/2))]

we just need to find the vertical (y-axis) difference between the 2D y-axis values, which is simply:

Δy = |y₁ - y₀|

Δy = [H/(Z*cos(a/2))] - [H/Z]

Simplified:

Δy = H/Z * (1/cos(a/2)-1)

We can also reduce the expression H/Z into terms of h and R by substitution:

H/Z = (S+h) / ((D*R)/(h+R))

H/Z = (((h*R)/(h+R))+h) / (((sqrt(h*(h+2*R)))*R)/(h+R))

H/Z = sqrt(h*(h+2*R)) / R

H/Z = D / R

Giving us:

Δy = D/R * (1/cos(a/2)-1)

Finding the Frame

Next we need to find the extents of our frame. Since the edge of the horizon is also the edge of our photo, that rightmost point gives us our greatest x extent, and we need to double that (to account for the left side). We then divide by this quantity to scale our Δy value into a ratio of the whole frame. So 2 times the x value of B" would be:

2*(Z*sin(a/2))/(Z*cos(a/2))

The Z cancels out leaving us with:

2*(sin(a/2))/(cos(a/2))

So we can now divide Δy by this extent value, 2*tan(a/2), and simplify with the half-angle identity tan(x/2) = (1-cos(x))/sin(x) to get a ratio:

D/R * (tan(a/4)/2)
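For anyone who wants the intermediate algebra, here is the simplification written out. It uses only the Δy and frame-extent expressions already derived, plus the standard half-angle identity with x = a/2.

```latex
\frac{\Delta y}{2\tan(a/2)}
  = \frac{D}{R}\cdot\frac{\dfrac{1}{\cos(a/2)} - 1}{2\,\dfrac{\sin(a/2)}{\cos(a/2)}}
  = \frac{D}{R}\cdot\frac{1-\cos(a/2)}{2\sin(a/2)}
  = \frac{D}{R}\cdot\frac{\tan(a/4)}{2}
```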

Find the Pixels

We finally only need to multiply our ratio by the number of horizontal pixels (assuming the pixels are square, or you would need to adjust for the pixel ratio) to give our final value

Sagitta Pixel Height = p * D/R * (tan(a/4)/2) = 41.07

Therefore, in an undistorted, rectilinear 1920 pixel horizontal resolution image with a Field of View of 94.4° we should expect to see approximately 41 pixels of curvature from 100,000'.
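The straight-out formula is short enough to evaluate directly. Below is a small Python sketch; the function name `sagitta_pixels_level` is made up for this example, and it assumes the same spherical Earth, square pixels, and an ideal rectilinear lens.

```python
import math

def sagitta_pixels_level(h_mi, R_mi=3959.0, fov_deg=94.4, width_px=1920):
    """Expected horizon 'bump' in pixels with the camera looking straight out."""
    D = math.sqrt(h_mi * (h_mi + 2.0 * R_mi))      # distance to the horizon
    quarter_fov = math.radians(fov_deg) / 4.0
    return width_px * (D / R_mi) * math.tan(quarter_fov) / 2.0

print(round(sagitta_pixels_level(100000 / 5280), 2))   # 41.07

# The same function can be pointed at other altitudes, e.g. airliner height.
print(sagitta_pixels_level(35000 / 5280))
```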

Already very close, but we're looking right at the horizon rather than straight out. Does that change our calculation? Well, yes, but only a little bit, because in tilting the camera we've slightly changed the point on the horizon which hits the edge of our frame.

Rotated Coordinates

Now let's tilt our camera down so it points right at the horizon peak (P). To do this we will need to rotate our coordinates by our Horizon Dip angle about our x-axis (x coordinates remain unchanged but y and z should rotate) so that point P ends up directly ahead at [0, 0]. Then we can repeat the calculations above.

The rotational matrix multiplication for x-axis rotation is:

⎡x⎤ ⎡ 1   0    0  ⎤
⎢y⎥ ⎢ 0  cos -sin ⎥
⎣z⎦ ⎣ 0  sin  cos ⎦

Given an angle u the equations to transform x, y, and z coordinates are:

x' = x

y' = y*cos(u) - z*sin(u)

z' = y*sin(u) + z*cos(u)

Since we are 'looking down' we need to rotate 'up' so our angle will be positive and equal to Horizon Dip to bring P right into the center of our frame at [0,0].

Our point P starts at [0, H, Z] and we need to rotate point P to be straight ahead, so our u angle:

u = atan(H/Z) = atan(D/R)

x' = 0

y' = H*cos(u) - Z*sin(u) = 0

z' = H*sin(u) + Z*cos(u) = D

P' = [0, 0, D]

and project into 2D:

P" = [0/D, 0/D]

P" = [0, 0]

That was easy because the rotation exactly cancels out the previous H/Z slope. But it's a good check that our rotation was in the desired direction.
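Here is the same sanity check as a tiny Python sketch. The helper `rotate_about_x` is a hypothetical name; it just applies the three rotation equations above to the table values for H and Z.

```python
import math

def rotate_about_x(point, u):
    """Rotate [x, y, z] about the x-axis by angle u (radians)."""
    x, y, z = point
    return (x,
            y * math.cos(u) - z * math.sin(u),
            y * math.sin(u) + z * math.cos(u))

H, Z = 37.7886, 385.8664          # table values, in miles
u = math.atan2(H, Z)              # Horizon Dip, in radians
x_r, y_r, z_r = rotate_about_x((0.0, H, Z), u)

# y_r should vanish (P is now dead ahead) and z_r should equal D.
print(x_r, y_r, z_r)
```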

And next we need to rotate point B, we start out in the same place as before (this introduces a small error because our rotated FOV would intersect the horizon circle at a slightly different point, I'm assuming a small rotation so the error is negligible and we can ignore this term).

B = [Z*sin(a/2), H, Z*cos(a/2)]

And we rotate by matrix multiplication. For our small angles the cos(u) term will be the majority of the value, and we shift a tiny bit into sin(u).

x' = Z*sin(a/2)

y' = H*cos(u) - (Z*cos(a/2))*sin(u)

z' = H*sin(u) + (Z*cos(a/2))*cos(u)

and project into 2D by dividing x and y by z:

B" = [(Z*sin(a/2))/(H*sin(u)+(Z*cos(a/2))*cos(u)), (H*cos(u)-(Z*cos(a/2))*sin(u))/(H*sin(u)+(Z*cos(a/2))*cos(u))]

This is why you need special video cards to play games at high frame rates... and we've only rotated one axis.

Since point P's y value is 0 our Δy is therefore just our y value from B":

Δy = (H*cos(u) - (Z*cos(a/2))*sin(u)) / (H*sin(u) + (Z*cos(a/2))*cos(u))

Divide Δy by 2 times B" rotated x-extent (x/z) to get our ratio (I multiply by the inverse here):

[(H*cos(u) - (Z*cos(a/2))*sin(u)) / (H*sin(u) + (Z*cos(a/2))*cos(u))] * [(H*sin(u) + (Z*cos(a/2))*cos(u)) / (2*Z*sin(a/2))]

The first denominator and the second numerator cancel out leaving:

(H*cos(u) - (Z*cos(a/2))*sin(u)) / (2*Z*sin(a/2))

and multiply by our horizontal pixel count to scale back to pixels, giving our formula:

Sagitta Pixel Height = p * (H*cos(u) - (Z*cos(a/2))*sin(u)) / (2*Z*sin(a/2))

In this case we get 40.9 pixels.
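A Python sketch of the tilted-camera formula follows. The function name `sagitta_pixels_tilted` is my own, and it carries the same small-rotation shortcut described above (point B is not re-solved for the tilted Field of View), so treat it as an approximation rather than an exact projection.

```python
import math

def sagitta_pixels_tilted(h_mi, R_mi=3959.0, fov_deg=94.4, width_px=1920):
    """Expected horizon 'bump' in pixels with the camera aimed at the horizon peak."""
    half_fov = math.radians(fov_deg) / 2.0
    D = math.sqrt(h_mi * (h_mi + 2.0 * R_mi))    # distance to the horizon
    Z = D * R_mi / (h_mi + R_mi)                 # radius of the horizon circle
    H = h_mi * R_mi / (h_mi + R_mi) + h_mi       # observer above the horizon plane
    u = math.atan2(H, Z)                         # Horizon Dip (rotation angle)
    numerator = H * math.cos(u) - Z * math.cos(half_fov) * math.sin(u)
    denominator = 2.0 * Z * math.sin(half_fov)
    return width_px * numerator / denominator

print(round(sagitta_pixels_tilted(100000 / 5280), 1))   # 40.9
```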

With the details of the lens "fish-eye" or curvilinear properties we could further transform our image to match more exactly. Since the horizon is near the center of the lens, the distortion should be slight and working against the visible curvature, so I'll leave the lens correction as an exercise for the reader. The simplest method would be to process the image using the Lightroom/Photoshop plugins that can do the lens correction automatically and then just measure the pixels.

Conclusion

Because the horizon is at or below the center line, which would flatten the curvature not exaggerate it, we can easily be certain that this footage shows that the horizon is convexly curved. We have also calculated what we would expect to see under ideal conditions and find a good match with our observation once we account for the actual lens distortions.

We can see that our lens, altitude, and FOV are all very important to figuring out what we expect to see, and knowing what to expect is critical in evaluating what we actually see - especially through the eye of a camera lens. And just because the camera image has some distortion doesn't mean the photo tells us nothing about the scene. Indeed, this image very clearly shows the curvature despite the distortion working against it.

If you want to measure the curvature you have to be very careful and pretty high up.

And while 35,000' sounds pretty high up, here is what that looks like:

Online Lens Correction photo-kako

Howto Remove HERO3 Lens Distortion: youtube

PTLens

ImageMagick: Correcting Lens Distortions in Digital Photographs

Here is our image with correction applied to 'defish' the image and you can clearly see the inverse effect it has in the 'pincushion' appearance of the previous straight-lines (the progress bar and text). But as you can see, our curvature remains clearly pronounced because there is very little distortion in the center of the lens and because we've placed all of our horizon below center the evil 'fish-eye' was actually slightly flattening out the real curvature.

There it is folks -- the curvature of the horizon at ~100,000'

The Bad News/Good News

Of course, this only demonstrates that our horizon is a circle of a radius entirely consistent with the oblate spheroid model of the Earth. What we have done here is calculate how big we would expect that circle to be given our altitude above a spheroid and how that should appear in a camera lens given some field of view.

Measurements and observations can only lend weight to any model, they never "prove" it, as Flat Earthers are so fond of claiming. Rarely is this more clear than the observation that just because a photo makes it look flat (or curved), doesn't mean it is actually flat (or curved). It just means the curvature is hard to measure, especially at lower altitudes (in this case, due to the immense size of the Earth) and in the face of lens distortion. But we have taken these into account in this analysis so we can be fairly sure we are seeing actual curvature of the horizon.

But I see no way that this view would be consistent with a Flat Earth model; there is no known physics that would explain the observed size of this horizon on a Flat Earth. We are well above the majority of the atmosphere here so we would be able to see MUCH further if the plane below us were flat.

Thanks to doctorbuttons for pointing out the disagreement with Lynch in my original calculator and to Mick for his contribution of the 3D to 2D transforms.

[¹] Lynch, David K. "Visually Discerning the Curvature of the Earth." Applied Optics 47.34 (2008): n. pag. Web. 09 Sept. 2016.
